[Recorded live on 06/06/2020]

What's this session all about?

This session features Senior Solutions Engineer David Olaniyan and Lead Technical Consultant Daniel Harris from our solutions engineering team discussing the new Bot Framework SDK for .NET. After defining the basic terms and elements relevant to bot frameworks, they discuss capabilities, limitations, and some practical enterprise use cases, and run some live code demos.

It's amazing how easy it can be to create value with the Microsoft Bot Framework SDK for .NET. Watch the video or read the transcription below to find out more.

Topics Covered:

- Comparison of all the available Bot Frameworks

- Overview of key components of a Bot Framework

- Show the setup of a simple echobot and what is required to create it

- Demo of another simple bot and how to tweak the code for different outputs

Key Sections:

[4:37] What is a bot?

[7:04] Components of a conversational AI experience

[18:39] Development tools

[20:51] General concepts

[22:43] State, authentication, dialogs, LUIS, and publishing bots

[26:36] A demo on starting a bot project

Video transcription.

Chris: Hi, everyone. I'm Chris Futcher from Cavendish Wood. I’ll just tell you a little about the organisation before we start. Cavendish Wood is a full lifecycle digital transformation company. We help organisations in both public and private sectors define a digital strategy, implement methodology, culture change, and innovation. We also design and deliver enterprise solutions including physical and cloud infrastructure, application development, and systems integration.

As you probably guessed, we're strong advocates for utilising technology to solve business problems and create meaningful transformation within the enterprise. Today's topic is the new Bot Framework SDK from Microsoft for .NET. Today's presenters are David Olaniyan and Dan Harris from our solutions engineering team. Dan is part of our senior management team and has been with the organisation for about six years now, so he's more or less part of the furniture. And David is a senior solutions developer and is currently working on our Department for Work and Pensions account team, developing a large identity solution which we're currently engaged to deliver for them.

Both of them are senior technologists for Cavendish Wood and responsible for the delivery of innovations to all of our clients. They take an in-depth interest in all emerging technology most of which hopefully we'll cover over the next few months in our Tech Talk sessions. They're going to be covering a comparison of all the available bot frameworks around today, including costs, features and more. They’ll offer an overview of key components of bot frameworks. We're going to show the setup of a simple echo bot and what's required to create it. And then we're going to demo another simple bot and how to tweak the code for different outputs.

I want you to think about potential use cases in your organisations. We're starting to see more internal enterprise use cases as well as customer-facing solutions. I'm now going to play you a short video of an enterprise bot which was way ahead of its time.

Bot: Hello, IT.

Male: Something is wrong with my computer.

Bot: Have you tried turning it off and on again?

Male: All clear.

Bot: You're welcome, mate. Hello, IT.

Female: I've tried turning it off and on again and nothing happened.

Bot: Is it definitely plugged in?

Female: Let me take a look. Thanks very much.

Bot: You're welcome, mate.

Chris: Right. A great use case for a bot there. Without further ado, I'm going to hand it over to David. Thank you very much. David, over to you.

What is a bot?

David: All right. Thank you for that introduction, Chris. As Chris mentioned earlier, we're going to cover an introduction to bots and give an overview of what bots are and how they can be useful to organisations. We're then going to focus on the Microsoft Bot Framework, which is the solution developed by Microsoft. The first question that we need to address is what a bot is. Many years ago, each time I heard the word bot, the first thing that came to my mind was some automated response from a website where I'm trying to get in touch with a customer agent and I'm redirected to an automated response, which sometimes gets me frustrated. But bots go way beyond this.

As a developer, there's a lot more that can be achieved with bots from being able to get personalised information to accessing different types of data. Bots can be really useful. And I think over the course of this presentation, you'll get to understand a little more of what bots are. The simplest definition is basically to define bot as a software application which can run operations autonomously. And you can communicate with it and then it can communicate back with you as a user.

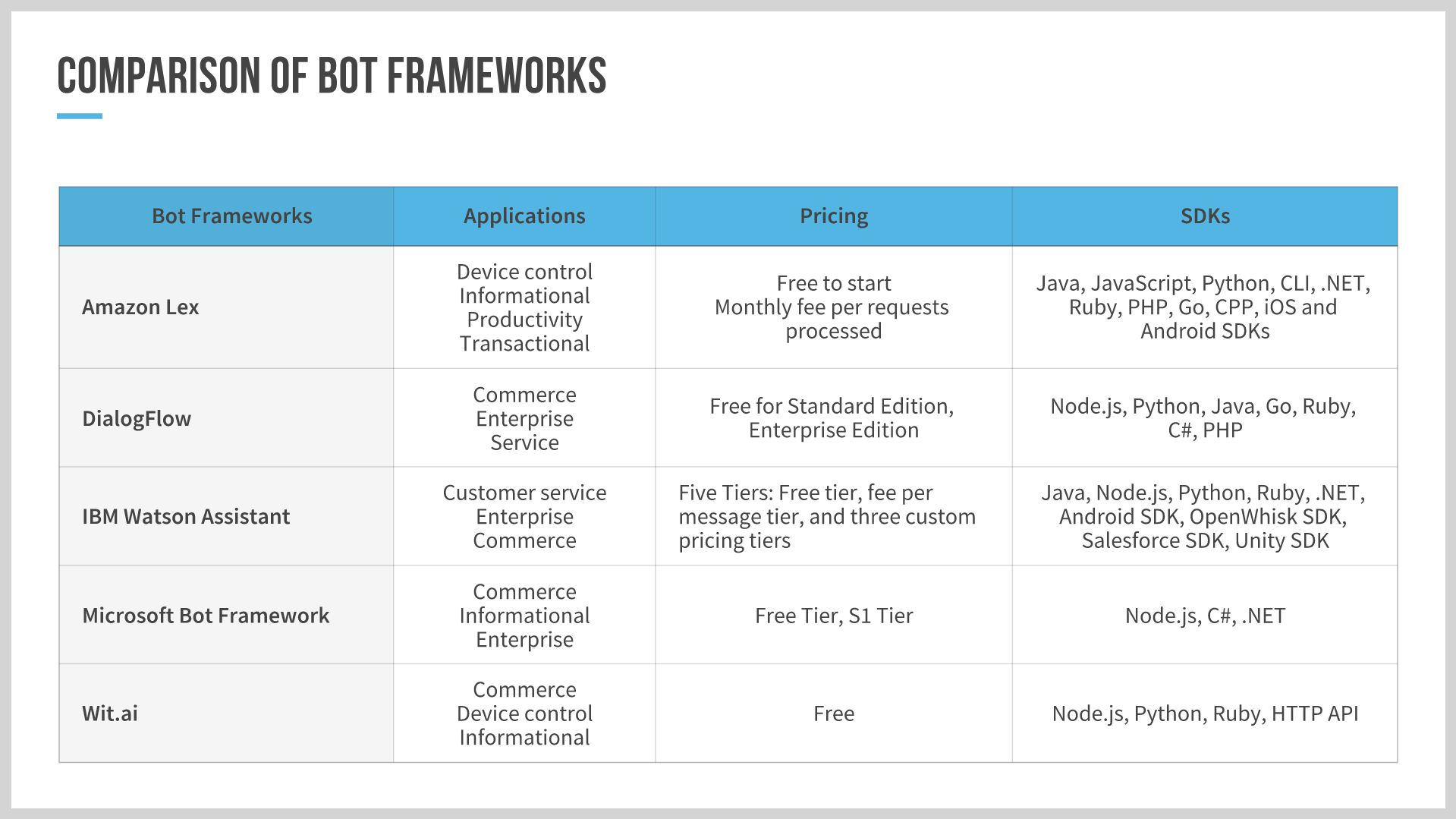

Going forward, we'll want to compare the different bot frameworks we have. We have dozens of bots and bot SDKs which are developed by different companies from leading tech giants to some open source projects. In this case, we've only highlighted a few of them. And we have Amazon Lex which is built on the same framework that supports Alexa which we are all familiar with. A lot of these companies have been using some of these technologies in-house, but have currently made it easy for developers to build custom solutions using these frameworks.

For this presentation, we'll focus on the Microsoft Bot Framework, which has more of an enterprise focus because that is where Microsoft has a lot of strength. It has a free pricing tier, so it's easy for developers to pick this up and learn without incurring any significant cost. It also has support for Node.js and for C# on .NET. Finally, I will hand over to Dan, who is going to walk us through the different components that make up the services that power the Microsoft Bot Framework.

Components of a conversational AI experience.

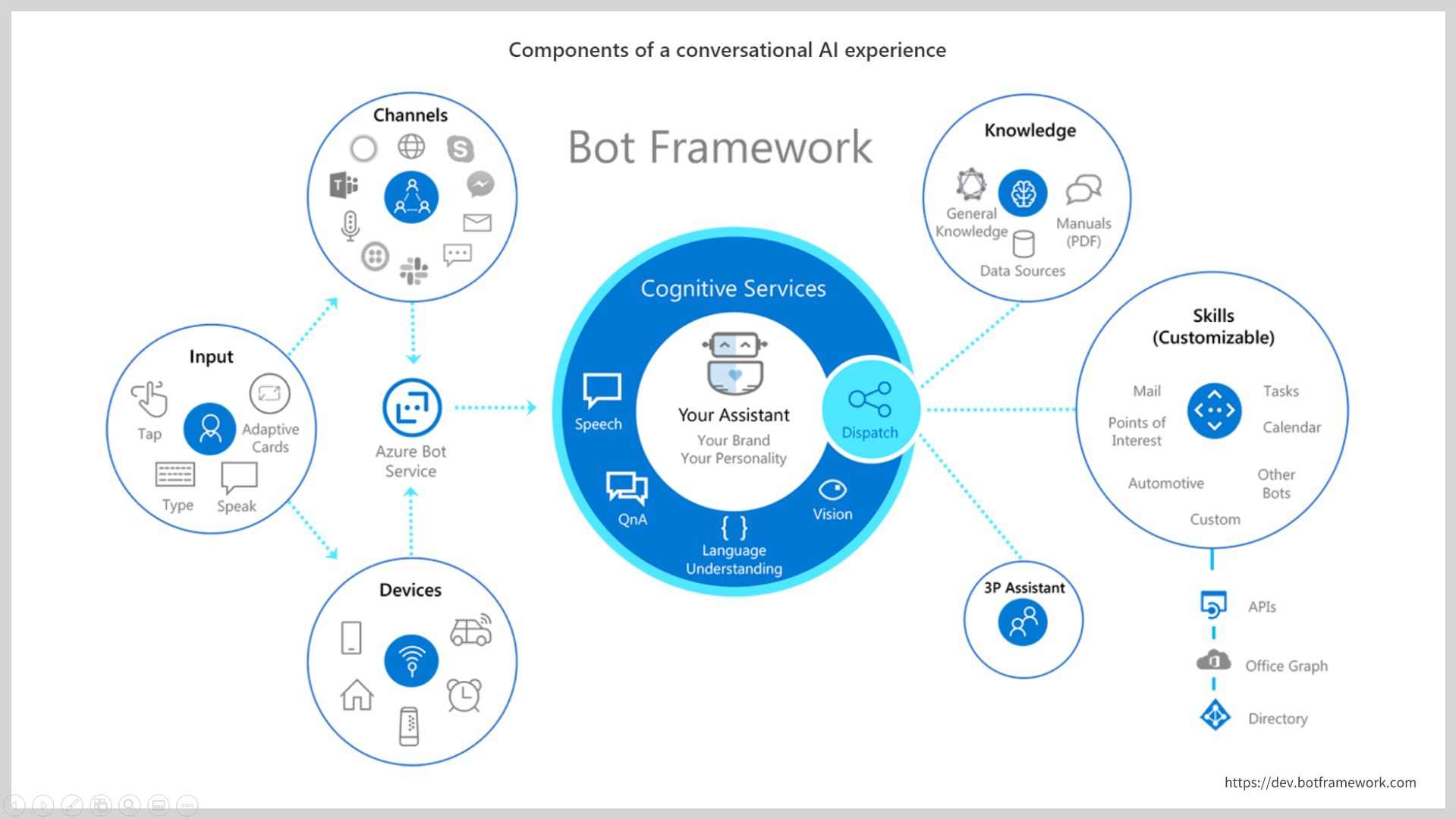

Dan: Thanks, David. As David was saying, these are the components that make up a typical Bot Framework bot. We're talking in the context of the Microsoft framework here, but many of the concepts apply to the other frameworks that David had up on the screen before. They may have different names, much as Azure and AWS offer similar services under different names.

The first thing we want to talk about is the inputs on the left-hand side. One of the things that makes bots really versatile, inclusive, and accessible is the wide range of input methods that they support. When developed correctly, it should be possible for anybody to use your bot effectively. This wide range of inputs allows a user to interact in the way they prefer based on their current situation. It can also vary person-to-person across regions or age groups. Everyone has a preference for how they like to interact with technology. Some people are still quite shy about talking to their phone, especially in public. They would probably opt for the typing option at that point.

To initiate any of those inputs, you need a device to accept that input. This device wheel on the bottom shows just some devices that you might interact with a bot from. You can see most of these are devices that a user would interact with. It's probably important to point out as well that these don't have to be devices with user input. They could be an IoT device sending data from, you know, a sensor taking in info from the real world.

One example which I think is quite interesting on this slide is the car. Car manufacturers are starting to put a bot at the heart of their infotainment system, a system that gives them info about the car, with highly specialised systems that can give you alerts at the appropriate time and even just simple things like navigating your music. By building their own bots, they can customise it to their specific vehicle.

David: And I think one of the exciting things about that is also how it makes it easy to build accessible applications. It's easy to build a bridge between people who may be able to type and view on the screen and people who may not be able to do this and would need some kind of audio interface to connect with a bot.

Dan: You don't want to implement every input with every device. In a car, typing is quite cumbersome. It can be dangerous. Voice, adaptive cards and touch are suitable for a car. On a phone, you're probably going to implement most of these inputs to get the best user experience. Just moving on to channels on the top of this slide on the left. The Bot Framework supports a wide number of channels. A channel is a location where you may interact with a bot from.

Some common channels that you can see there, things that a lot of us use every day, include messaging platforms such as Skype, Teams, and Slack. You can also include more traditional technologies such as SMS, which might sound a bit low-tech to people. But SMS could be useful if you want your bot to work in emerging markets or you expect it to be used in areas with a poor data signal, which still exist in a lot of parts of the world.

The Bot Framework makes it easy to integrate with multiple channels at once with very little effort and maximum reuse of your code. You can build the bot once and then get maximum reach by deploying across all the relevant channels that the framework supports. Microsoft has two types of channels: standard and premium. In the free tier, you can send unlimited messages to the standard channels. You might be surprised to learn that most channels fall into that standard tier, including Teams, Slack, and Facebook Messenger. You can do quite a lot with this framework for very little cost.

I'll just move on now to the cognitive services in the middle. This is where bots get a little more interesting because these services are what give the bot its intelligence, its ability to interact in a natural, almost human-like way. What I think is great about these cognitive services built on Azure is they really do democratise AI and machine learning. They allow all developers to build intelligence into their applications and their bots.

Previously, it could take years to develop these technologies in-house. Now they're at the end of a simple API call for absolutely anybody to use. It could be a hobbyist learning in their spare time, right up to enterprises who don't want to invest in building their own internal AI and machine learning platforms. The language understanding service, which you can see at the bottom of that wheel, is important: it's what allows the bot to interpret natural language input and then determine the specific intent of the user. Here's a basic example.

If I were to say, "Ping a text over to David," that's the same thing as, "Send David an SMS." The language understanding service takes both of those inputs and turns them into what you could consider a strongly-typed action or intent there that the bot can understand and then execute the relevant action regardless of which phrase the user chose to use at that time.
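To make the "two phrases, one intent" idea concrete, here is a minimal C# sketch. It is a toy lookup table, not the language understanding service itself: the class name `ToyIntentRecognizer`, the intent name `SendSms`, and the phrase list are all hypothetical. A real service generalises to phrasings it has never seen, which a dictionary cannot do.

```csharp
using System;
using System.Collections.Generic;

// Toy stand-in for a language understanding service.
// Assumption: this is NOT LUIS; it only shows the shape of the result,
// i.e. different utterances collapsing into one strongly typed intent.
public static class ToyIntentRecognizer
{
    // Hypothetical example phrases, matched case-insensitively.
    private static readonly Dictionary<string, string> Utterances =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            ["Ping a text over to David"] = "SendSms",
            ["Send David an SMS"] = "SendSms",
        };

    // Returns the intent name for a known phrase, or "None" otherwise.
    public static string Recognize(string utterance)
    {
        var key = (utterance ?? string.Empty).Trim().TrimEnd('.', ',', '!', '?');
        return Utterances.TryGetValue(key, out var intent) ? intent : "None";
    }
}
```

Both `ToyIntentRecognizer.Recognize("Ping a text over to David,")` and `ToyIntentRecognizer.Recognize("send david an sms")` resolve to the same `SendSms` intent, so the bot's action code only has to handle one case.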

Next, on the top right, we'll have a quick talk around the knowledge section. Combined with things such as the QnA Maker cognitive service, you can very quickly build a bot that will answer questions that a user may pose to it. This can make use of structured data such as HTML. You can give the QnA Maker a link to an FAQ page on your website. It'll build up a bank of questions and answers and the language understanding model needed to interpret user input. That language understanding model can get smarter through use as well. It'll continually be learning.

David: I think one of the things I find interesting about the QnA Maker service is the fact that you could take a raw PDF document that was probably created 20 years ago, upload it to the service, and it would intelligently determine the headings and subheadings of the document based on how bold the text is. It's then able to structure this in a way that lets it serve users who have very specific queries. So there's no need to flip through to page 148, sections 5 and 2. With just a simple question, the bot can take this existing data and serve a response to the user.

Dan: Yeah.

David: I think that's very interesting.

Dan: It's pretty amazing to see all the services that it uses to scan those documents: the Vision API, language understanding. Seeing those all come together to do something like that is pretty amazing. And we’ll cover that in a future video. Moving on to the skills now, I suppose the last part we're going to cover on this slide, you can think of a skill almost as a self-contained bot that performs a specific set of reusable actions. Similar to how we as humans tend to have unique skills and expertise, bots can too. So you may end up developing multiple bot skills and then connecting them to form almost a super team of bots.

One simple example is that you could build a skill that handles customer retention. It's designed to take a customer who wants to leave your company and persuade them to stay. It may have access to offers available to that user. Then you have a general customer service web chatbot, which would be considered the root bot. When the root bot detects that a user is a bit disgruntled, or wants to leave the service, it calls on the expertise of that skill to try to retain the customer.

And just another, maybe it's a bit more of a geeky kind of example, is if you think of Tony Stark's assistant in "Iron Man." Rather than that being a single assistant that can do everything, if that was built on the bot framework, you'd have a weapons expert skill, a medic skill, a tactic skill, and the central bot would call out to the expertise via those skills.

And you can see some of the out-of-the-box skills that they provide there, such as email, calendar, and tasks. These allow you to integrate quickly. Say you have your own enterprise bot that you want to integrate with Office 365 to add appointments to a calendar. Rather than coding that from scratch, you could call that built-in calendar skill. At the moment it is focused on Office 365, but Microsoft did state that they are expanding the mail and calendar skills to include the Google APIs soon as well; these are currently in the works. A skill is a self-contained bot which a user can interact with directly or which can be accessed via another bot. This is where you're going to create your custom skills that interact with your internal systems.

David: All right. I think this is a very interesting overview of all the different possibilities. And it's interesting to know that there's no one way to do this. This can be implemented in many ways. It could be for an organisation to have a bot that's able to get the summary of sales reports for the first quarter and then the bot would intelligently go compute this and return the response which would make it easier than having to choose different program filters from an application.

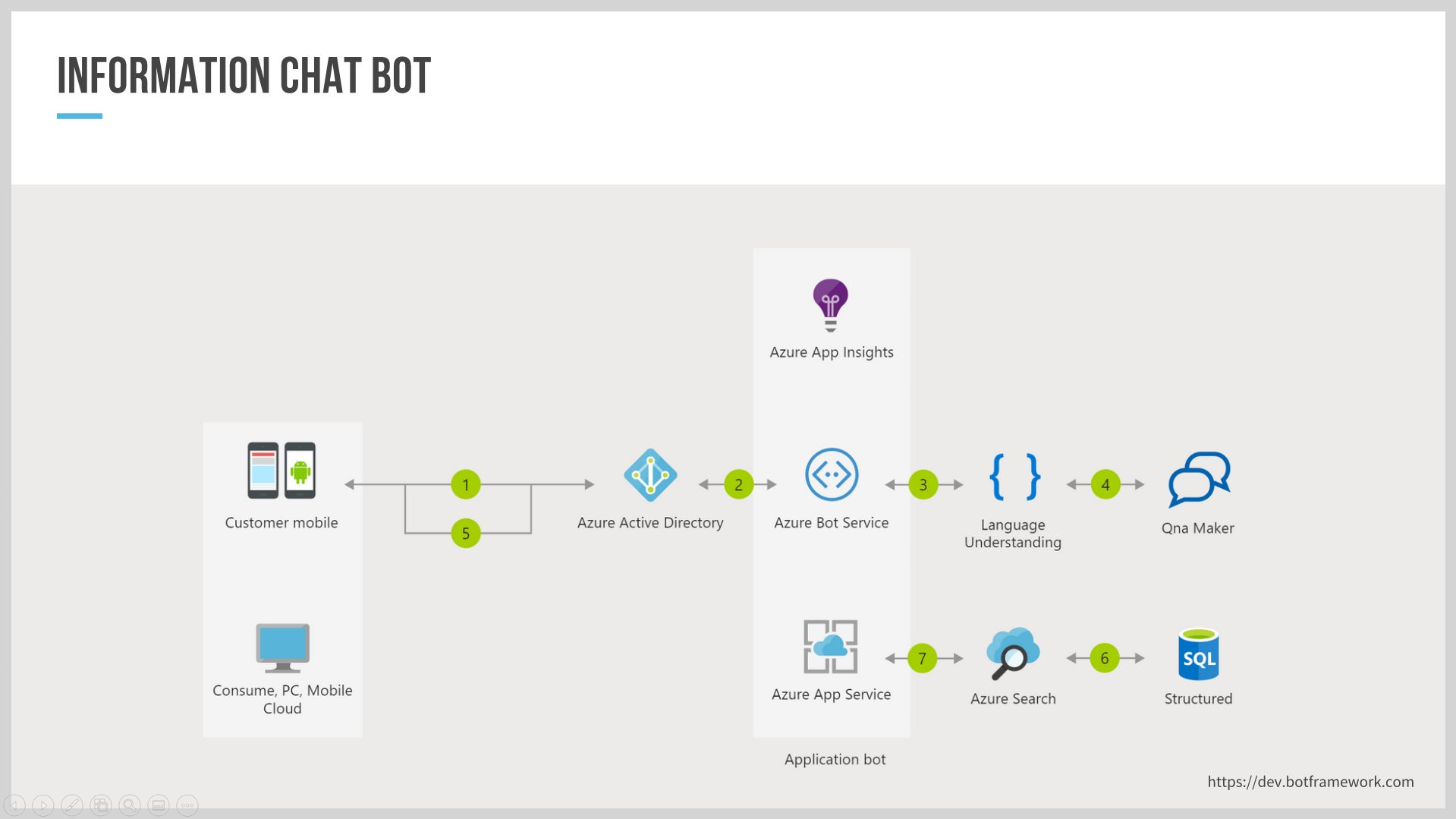

This takes us on to the next screen, which I think I skipped, and which gives us an insight into what the architecture of a bot looks like. Here we have a client, which could be a mobile app or one of the other devices that Dan mentioned earlier. We have the Azure Bot Service, which is an API project that is securely deployed on Microsoft Azure.

This service can communicate with other third-party services, depending on what the function of the bot is, and get some response back to the user. Most of the development you would do is more or less like building an API project, but with a special library that helps to handle the communication with users and manage all the state and the different things that are needed to personalise the service.

Development tools.

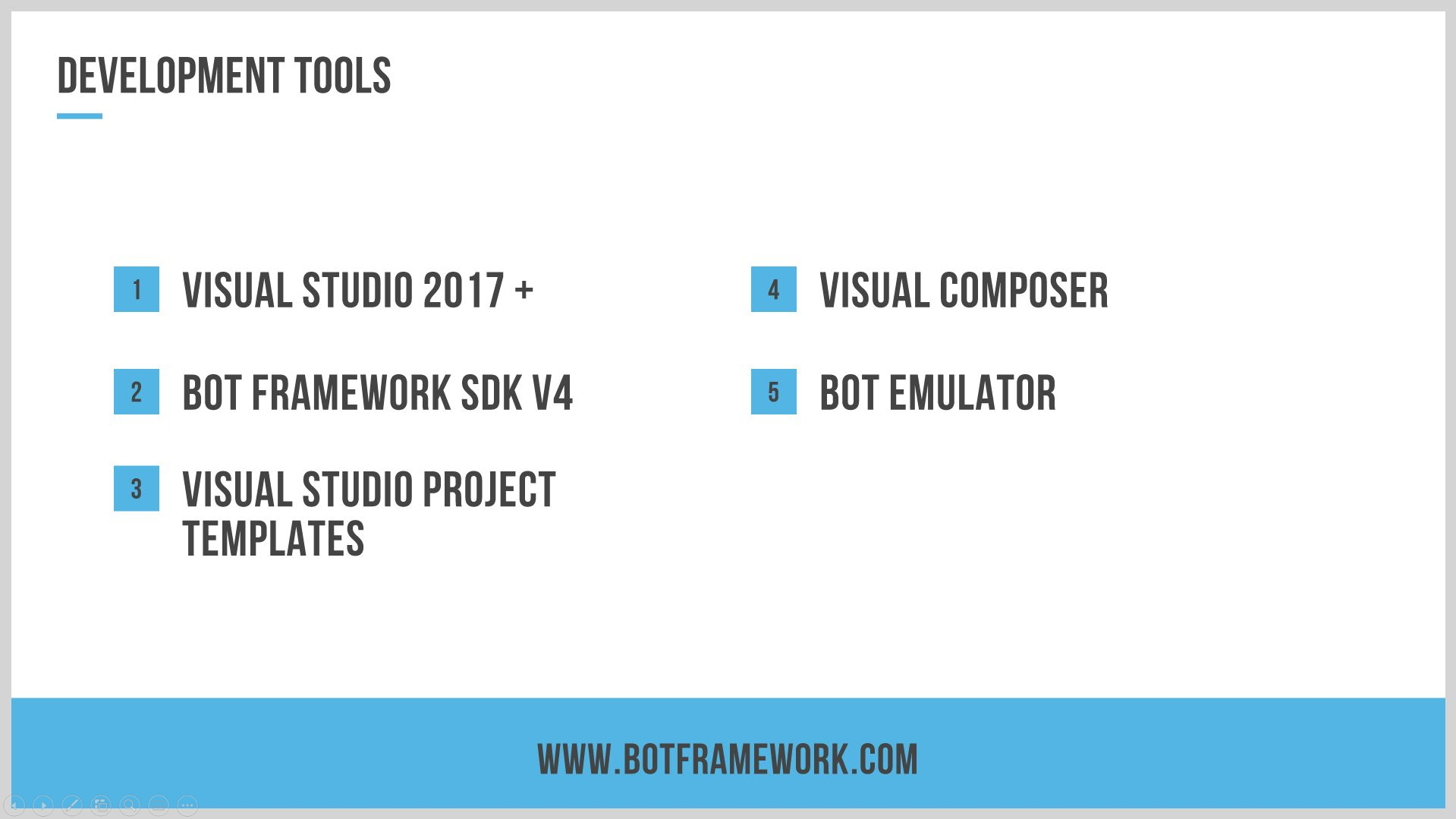

Before we go into the development: the first tool we need to build a bot using the Microsoft Bot Framework is Visual Studio. To build on version 4 of the framework, it's recommended that you use Visual Studio 2017 or later. You also need to install the Bot Framework SDK. And there are Visual Studio templates which you also need to install. These are boilerplate templates you can use to kick off a project with some very minimal code that helps to guide your learning or development process as you go on.

There's an interesting tool called Visual Composer. This helps you to start creating a bot without writing a line of code. It's friendly in that you can compose the different sections, branches, and connections of conversations in your bot visually. After you have done some work here, it's easy to download the source code of the project and continue your development by writing actual code if you wish to do so. Then there's a bot emulator, which is a client that you would use to test your bot during development. Most bots end up getting deployed to either Cortana or Alexa, or could have Microsoft Teams as a channel. But while you're developing, you need a way to test. With the bot emulator, you are able to do this.

Dan: I think the Visual Composer is something that people should take a look at, because I know one of the complaints about earlier versions of the framework was that there was no visual tooling to build bots. It's great if you want to get a demo up and running quickly to do something internally. I just love that you can then take the code and continue with that and do full development on it.

General concepts.

David: I think the whole idea is just to make this as easy as possible, so developers can focus on solving business problems instead of having to become deep learning experts. These are some general concepts about bots. Just before we take a look at some code, it is important to highlight some of them. There's something called an activity, which represents some type of information that is passed between one party and the other in a conversation. And there are different types of activities that I will also showcase on the next screen.

And a turn is the actual movement of information. Think of it like the packaged information that would include the message and then the receiver and then the sender. The turn context is the piece in the middle that manages the sending and receiving of requests. This just makes things much easier from the code development side, which we will get into in more detail in the next couple of slides.

These are 15 different activity types that we have currently in version 4 of the bot framework. And as we can see there are more things than the actual message that moves between the user and the bot or vice versa.

In this case, we have things like the message itself. Then, when a message is deleted, there's also some form of notification that is sent over to your project so you're able to make the necessary decision. Typing is also a type of activity, which is something I think some of us would be familiar with. When you use Teams, you get some feedback that lets you know the other user is typing.
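As a rough illustration of how code can tell these activity types apart, here is a minimal C# sketch. It assumes the Microsoft.Bot.Builder NuGet package is referenced; the class name `ActivityAwareBot` and the description strings are hypothetical. `IBot`, `ITurnContext`, and the `ActivityTypes` constants come from the SDK.

```csharp
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Bot.Builder;
using Microsoft.Bot.Schema;

// Hypothetical bot that inspects the type of every incoming activity.
public class ActivityAwareBot : IBot
{
    // Maps a few of the activity types mentioned in the talk to a description.
    public static string Describe(string activityType)
    {
        switch (activityType)
        {
            case ActivityTypes.Message: return "a message was sent";
            case ActivityTypes.MessageDelete: return "a message was deleted";
            case ActivityTypes.Typing: return "the other party is typing";
            case ActivityTypes.ConversationUpdate: return "members joined or left";
            default: return "some other activity: " + activityType;
        }
    }

    // Called once per turn; the turn context carries the incoming activity.
    public async Task OnTurnAsync(ITurnContext turnContext, CancellationToken cancellationToken = default)
    {
        // Only reply to real messages; typing indicators and the like are ignored here.
        if (turnContext.Activity.Type == ActivityTypes.Message)
        {
            await turnContext.SendActivityAsync(MessageFactory.Text("Got your message."), cancellationToken);
        }
    }
}
```

In practice the SDK's `ActivityHandler` base class does this dispatching for you, which is the approach the echo bot template in the demo uses.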

State, authentication, dialogs, LUIS, and publishing bots.

I will mostly be focusing on just a couple of these in the code that I showcase. But this is just to give you some insight that there are lots of different activities you could gain access to using the Bot Framework. I'm just going to mention some terms that we will not be covering in this project, just to highlight how useful they can be. There's state, which helps your bot remember things because, at the end of the day, your bots need to be able to remember certain things in the conversation.

Communicating with a bot is more than filling in a form and signing up to join a website or a secured application; there's a need to keep some context after having some conversation or after getting some data from you. That's what state does. It helps you manage how that information is saved, and it could be either in temporary memory or in a more persistent data store.

Authentication: bots also need security. There are ways to implement open authentication protocols to secure your application. Then we have dialogs, which are ways of managing more complex conversations. The idea is basically to keep track of which things you have answered and transition you from one part of the conversation to the next based on the progress you have made while communicating with a bot.

Dan: I think one quick example of using states to enhance the user experience, a real-world example that a lot of us would have experienced is if you were to ask Google Assistant a simple question, "Who is the prime minister of the United Kingdom?" it would say, "Boris Johnson." But then if you wanted to find out how old he was, rather than saying, "How old is Boris Johnson?" you can just reply with, "How old is he?" and that state allows the Google Assistant to track the fact that you're still talking about Boris Johnson. It can make a judgment call and say, "This person probably wants to know the age of Boris Johnson." That's just a nicer user experience than having to make a new statement every time you want some information.

David: I think that's true. And at the end of the day, I think user experience is key. People have had very terrible experiences with bots, and for most people who have a bad experience with a bot the first time, it's very hard to trust it subsequently. That's why using things like state to understand what the user has already said, and to improve the experience in some way, is very useful.
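The idea of a bot remembering context between turns can be sketched in C# as follows. This is a minimal sketch, assuming the Microsoft.Bot.Builder package is referenced; `RememberingBot`, `ConversationData`, and the reply wording are hypothetical names chosen for illustration, while `ConversationState`, `IStatePropertyAccessor<T>`, and `MemoryStorage` are SDK types.

```csharp
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Bot.Builder;
using Microsoft.Bot.Schema;

// Hypothetical shape of what the bot remembers about a conversation.
public class ConversationData
{
    public string LastMessage { get; set; }
}

// Hypothetical bot that recalls the user's previous message on each turn.
public class RememberingBot : ActivityHandler
{
    private readonly ConversationState _conversationState;
    private readonly IStatePropertyAccessor<ConversationData> _accessor;

    public RememberingBot(ConversationState conversationState)
    {
        _conversationState = conversationState;
        _accessor = conversationState.CreateProperty<ConversationData>("ConversationData");
    }

    protected override async Task OnMessageActivityAsync(
        ITurnContext<IMessageActivity> turnContext, CancellationToken cancellationToken)
    {
        // Load this conversation's data, creating it on the first turn.
        var data = await _accessor.GetAsync(turnContext, () => new ConversationData(), cancellationToken);

        var reply = data.LastMessage == null
            ? "This is the first thing you have said."
            : $"Before this you said: {data.LastMessage}";

        // Remember the current message for the next turn, then persist the change.
        data.LastMessage = turnContext.Activity.Text;
        await turnContext.SendActivityAsync(MessageFactory.Text(reply), cancellationToken);
        await _conversationState.SaveChangesAsync(turnContext, false, cancellationToken);
    }
}
```

Constructing the state with `new ConversationState(new MemoryStorage())` keeps everything in temporary memory, which is only suitable for local testing; swapping in a persistent `IStorage` implementation gives the more durable option mentioned in the talk.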

And we have LUIS. I won't say much about this because Dan talked about it earlier. It's a way to help your bot understand different ways of saying the same thing and provide strongly-typed outputs that your code can understand, so you can make the right decisions based on that. Then also, we would definitely need to have a bot published.

A demo on starting a bot project.

Dan: It's all well and good developing an amazing new application or a new bot. But if it's not published somewhere that people can access it, then it's useless. You would publish all bots on the Microsoft bot service on Azure. Once it's there, this is where you would then integrate with numerous channels which in most cases, is as simple as pasting in the app secret key from the third party service.

When it comes to building and testing your bot, there's no need to keep deploying back to the Azure service just to check a small change. The bot emulator uses a tool called ngrok to create a secure tunnel to the locally-hosted API on your machine. This is also useful for testing webhooks with Microsoft Teams and things like that. David is now going to walk us through creating, testing, and publishing a very simple echo bot project that can respond to both text and speech input.

We're going to show you the text input in the emulator, and then we're going to show a speech interaction via the Cortana virtual assistant. Just to set expectations, an echo bot will simply respond with what it heard the user say. We will be running future sessions that will go into a lot more detail, and we'll start to connect to the cognitive services. But for now, we just want to give a simple example of how you can get up and running with some very basic concepts. I'll hand it over to David to run us through that.

David: All right. Thank you, Dan. I have Visual Studio up on my screen. Dan, can you confirm if you can see?

Dan: It's going through clearly.

David: All right. Like I've mentioned before, a bot project is mostly an API project, and I will show that to us. The core thing we want to do here is just to see how quickly we can create a project using one of the templates, change the default that we have, publish it, and see what the output looks like. I will hit the Create a New Project button, and we will find that I have templates for the AI bot in this drop-down, which I only have because the SDK is installed. And I also have the Visual Studio templates that I mentioned earlier.

We have three different types of bot templates included here. The first one I will talk about is the core bot. The core bot is a more detailed template. It includes a lot of additional code which helps you understand how you could implement LUIS, the language understanding service, in a custom way. For instance, if you say, "Book me a flight from London to Manchester," LUIS can work out what London is and what Manchester is, and then the bot can get you some response or book the ticket for you based on that.

There's also a lot more on the implementation of dialogs and some things I mentioned that we may not necessarily cover here today. We also have an empty bot template. An empty bot is a blank project that you could use to start your project if you know exactly what you're doing and don't need any of the samples. And we have the echo bot, which is something in between.

The echo bot basically receives the text from the user and immediately packages the same text and sends it back to the user. That's why it's called an echo bot. So whatever text you give in, it's going to send that back to you instantly. I'm going to start a new project using .NET Core 3.1 which is the latest...which is a more recent version of .NET Core. For the name of my project, I'll leave it as the default and hit the Create button. Once the project comes up, we will find that it's no different from most API projects for people who are familiar with development using ASP.NET Core.

Yes, we had just some simple code here. I will view this in the browser, so we can see what this looks like and then come back to explain a couple of things that make this work. And while that is coming up, I would show us the bot emulator which is what I mentioned early on. You need to use this to test your bots during development so you don't have to wait until you have it deployed to, like, Microsoft Azure or when you have it ready to use in Microsoft Teams. The bot emulator works just like a chat client and you can open a new bot by hitting this button and providing the URL of your bot. So our bot is ready.

This is just a fancy page to show you that the service is running. The very important value we need here is the endpoint of the bots which is shown here. And we need this to connect to the bot from any of the clients we are working with. I would right-click and copy this and come over to the bot emulator and input that URL here. This will allow me to connect to the bot and then I can interact with it as a client. These fields would need to be filled if your bot is published to Azure already. And this is basically to provide some form of security over your bot which is something that is taken care of by the Azure bot service.

I will hit the Connect button, and I'm connected to the bot. This is the chat window, and it's just like every other instant messaging tool that we might be familiar with. There's a hello and welcome message. I'm just going to enter the text, "Hello world." And I get a response back and it just says, "Echo: Hello world." That's simply how this bot project works. I'm going to go back to the code and show us what makes this possible. Back to here.

Here, in our controllers, we have the bot controller, and we can see the api/messages endpoint is the same one that we copied and pasted. This is the endpoint we're hitting. We have just one method here, which is what receives and sends messages for our bot. And we have two different injected objects here. One is the adapter and the other is the bot. We pass the bot into a method here that is called ProcessAsync. What the adapter does here is take care of all the complex work involved in receiving the request, processing it, and then sending the response back to the user.

There's a lot of middleware code that helps us take care of a lot of complexity there. But what we need to take a look at here is the actual bot code, which is the brain of our bot and is where we handle things like received messages and the activities and turns that I talked about earlier. For those of us who are familiar with how dependency injection works, the IBot implementation is here, in the EchoBot.cs file, which is in the Bots folder.
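For readers following along without the video, the controller described above looks roughly like the following C# sketch. It assumes an ASP.NET Core project that references the Microsoft.Bot.Builder.Integration.AspNet.Core package; the private field names are my own choice, and the code generated by the template on your machine may differ in detail.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Bot.Builder;
using Microsoft.Bot.Builder.Integration.AspNet.Core;

// Exposes the single api/messages endpoint that clients and channels call.
[Route("api/messages")]
[ApiController]
public class BotController : ControllerBase
{
    private readonly IBotFrameworkHttpAdapter _adapter;
    private readonly IBot _bot;

    // Both objects are supplied by ASP.NET Core dependency injection.
    public BotController(IBotFrameworkHttpAdapter adapter, IBot bot)
    {
        _adapter = adapter;
        _bot = bot;
    }

    [HttpPost, HttpGet]
    public async Task PostAsync()
    {
        // The adapter reads the incoming request, runs the bot's turn logic,
        // and writes the outcome to the HTTP response.
        await _adapter.ProcessAsync(Request, Response, _bot);
    }
}
```

For the injection to work, the adapter and the bot have to be registered in `Startup.ConfigureServices`, for example with `services.AddTransient<IBot, EchoBot>()` plus a singleton registration for an `IBotFrameworkHttpAdapter` implementation. Because the controller depends only on `IBot`, swapping in a different bot class is a one-line change in that registration.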

Here we have two implemented methods in this class. One is OnMessageActivityAsync. The other one is OnMembersAddedAsync. I listed 15 different possible activities, and almost every one of them has a way for you to send some response or capture the event that happens when it is triggered. In this case, we have OnMessageActivityAsync, which is triggered when a message is received. As we can see, there are two different parameters here. One is the turnContext and the other one is the cancellationToken.

The turnContext contains all the packages of information that are coming in through the request or through whatever input method a user is using. As we can see here, we're able to pull out the text coming from the user by saying turnContext.Activity.Text, and what this simply does is concatenate the word "Echo" with whatever input text comes in. Then we use a method on the turnContext called SendActivityAsync to send a message back to the user. We compose the response using MessageFactory.Text, and the two parameters here are both the same reply text.

The first one is the text that will be displayed if it's a text output like a chat window. The second parameter represents the speak response: if you're using a platform like Cortana or Alexa, whatever you want the bot to read out is what you need to include here. And the cancellationToken is what you would need to know when the bot's operation needs to be cancelled.

Moving on to OnMembersAddedAsync, this is triggered when a user is added to the conversation. It has three parameters: the membersAdded, the turnContext, and the cancellationToken. What this does is loop through the list of members and make sure that each member is not the bot. In this case, our bot is going to be the turnContext.Activity.Recipient. We just make sure that it's not the bot, and we send a welcome message to any user who is not the bot. That is why I got a "Hello and welcome" in the chat I tested.
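Putting the two methods together, the echo bot class walked through above looks roughly like this C# sketch. It assumes the Microsoft.Bot.Builder package is referenced and follows the shape of the echo bot template; the exact welcome wording and layout generated on your machine may differ.

```csharp
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Bot.Builder;
using Microsoft.Bot.Schema;

public class EchoBot : ActivityHandler
{
    // Triggered whenever a message activity arrives from the user.
    protected override async Task OnMessageActivityAsync(
        ITurnContext<IMessageActivity> turnContext, CancellationToken cancellationToken)
    {
        var replyText = $"Echo: {turnContext.Activity.Text}";

        // First argument: text shown in a chat window.
        // Second argument: text to be spoken on voice-capable channels.
        await turnContext.SendActivityAsync(MessageFactory.Text(replyText, replyText), cancellationToken);
    }

    // Triggered when members are added to the conversation.
    protected override async Task OnMembersAddedAsync(
        IList<ChannelAccount> membersAdded,
        ITurnContext<IConversationUpdateActivity> turnContext,
        CancellationToken cancellationToken)
    {
        var welcomeText = "Hello and welcome!";
        foreach (var member in membersAdded)
        {
            // The recipient of the incoming activity is the bot itself, so skip it.
            if (member.Id != turnContext.Activity.Recipient.Id)
            {
                await turnContext.SendActivityAsync(MessageFactory.Text(welcomeText, welcomeText), cancellationToken);
            }
        }
    }
}
```

Changing the bot's output is then just a matter of editing `replyText` or `welcomeText`; nothing in the controller or the adapter needs to change.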

Dan: It's worth briefly calling out that you can see there the OnMembersAddedAsync method takes a list of channel accounts. You can see where the Bot Framework is abstracting away the specific implementation details of the channel. This could be coming from the emulator. It could be coming from Teams or Slack. It's just a very generic event, and you don't need to worry about that specific implementation. You just need to know that a member was added to the conversation, regardless of channel.

David: I'm going to do a quick publish now. Thank you, Dan. That's just to prove how easy it is to aggregate different channels and provide access to the same resource at the end of the day. I'm just going to do a quick publish of our bot. I have already set up a service on Azure that I'm going to show you. Here I am going to select an existing publish profile, which is connected to the bot service I have set up on Azure. Here we go. Then I'm going to publish this bot, and I will quickly take us to Azure to look at what the bot service looks like from the Azure side.

Dan: Dave, I think we just need to use the web deploy version. I think we have an issue.

David: Just selected that now. Thank you. Just to double-check that it’s self-contained. I'm going to initiate the publish now. And while we do that, I'm going to go over to the Microsoft Azure portal and I'm just going to dig down to where the bot service we're publishing now is hosted. The support for configuring how a bot connects to different channels that we mentioned earlier, this is where we can find and configure that.

This screen is quite similar to what we saw after creating the bot service locally. And we find that there's a Test in Web Chat option here which is similar to what we have in the bot emulator. I'll click on the Channels tab and that's going to allow us to see the list of channels that our bot is currently connected to. I have two issues here on my bot, but I guess this is just because of the development. But I think this is running fine.

We have four channels already connected to this specific bot service, and we have more channels here like Alexa and several other options that we could easily connect our bot to with just a few clicks of the setup function here. And just to show us how the Cortana channel is configured, I'll click on Edit to show us some things that were included when it was being created. It's so easy to connect a bot with different channels and instantly be able to provide input. And what we do in the bot service is basically take that input and perform whatever type of operation we want to with the data that comes in. And please, if you have any questions, remember to pop them in the chat window while we wait for our bot to get published.

Dan: If you just look on that Channels page, one thing which I forgot to mention earlier was you saw that Direct Line was one of the channels. That's one of the premium channels. The other one is Web Chat. What the Direct Line API allows you to do is deeply integrate your applications, whether it's a mobile app or a certain part of your interface on a device such as a car. It lets you integrate directly via a RESTful API call into your bot and send messages from anywhere that you may want to, without necessarily using one of those built-in channels that are available.
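To make the RESTful integration Dan mentions a little more concrete, here is a minimal sketch of sending one message to a bot over the Direct Line 3.0 REST API using only HttpClient. It was not shown in the session. The class name, method names, and user id are hypothetical, and the secret is assumed to come from the Direct Line channel configuration in the Azure portal.

```csharp
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical helper; not part of the Bot Framework SDK.
public static class DirectLineSketch
{
    private const string BaseUrl = "https://directline.botframework.com/v3/directline";

    // Builds the JSON body of a message activity sent on behalf of a user.
    public static string BuildMessageJson(string userId, string text) =>
        JsonSerializer.Serialize(new { type = "message", @from = new { id = userId }, text });

    public static async Task SendMessageAsync(string directLineSecret, string userId, string text)
    {
        using var http = new HttpClient();
        http.DefaultRequestHeaders.Authorization =
            new AuthenticationHeaderValue("Bearer", directLineSecret);

        // Step 1: start a conversation and read its id from the response.
        var start = await http.PostAsync($"{BaseUrl}/conversations", null);
        start.EnsureSuccessStatusCode();
        using var doc = JsonDocument.Parse(await start.Content.ReadAsStringAsync());
        var conversationId = doc.RootElement.GetProperty("conversationId").GetString();

        // Step 2: post the message activity into that conversation.
        var body = new StringContent(
            BuildMessageJson(userId, text), Encoding.UTF8, "application/json");
        var send = await http.PostAsync(
            $"{BaseUrl}/conversations/{conversationId}/activities", body);
        send.EnsureSuccessStatusCode();
    }
}
```

A mobile app or in-car interface would call something like `DirectLineSketch.SendMessageAsync(secret, "user1", "how are you")` and then retrieve the bot's replies from the same conversation. In a real client application you would normally exchange the secret for a short-lived token on a server rather than shipping the secret with the app.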

David: It's a flexible way to create whatever you want. And like Dan mentioned earlier, whether you're designing some IoT-based system that's similar to what Tony Stark used in "Avengers," or you have your own custom-built system, you can have the bot connected to that. Then it's easy to do whatever you want to do and pass some output back to the user.

Here we have the setup for a Cortana channel. Every channel would have some piece of information we need to set up. But in this case, we have what's called an invocation name, which just represents what triggers my bot to respond in Cortana. There are many things I could say to Cortana, like "Remind me of something." But in this case, if I want to trigger this app, I have to say the words "Super bot," and then anything I say after that is passed into the bot service, and then I'm able to get a response back after that happens.

This is so easy to set up. You could get this up and running in a few minutes. At the end of the day, you just have to focus on solving problems within your organisation. I think our publish is still running but will soon be complete. Yes. It's also interesting to know that besides Cortana, there's also Alexa, which is probably more popular for end-users in some parts of the world. It's also easy to have your bot working with Alexa and an existing Alexa deployment.

Dan: You can see there that Cortana sees your bot as a skill. That's what we were going back to earlier, saying a skill is also a specialised bot that performs an action. In this case, echo bot is not too fancy, but this could be. You could have something complex integrating with multiple systems within your organisation and Cortana just sees it as another skill. Similarly, you could build your own assistant if you wanted that would call out to these individual skills.

David: All right. Looks like echo bot is ready and live on Azure. And as we can see, we see something very similar to what we have when we view it locally. But before we have to wrap up the presentation, I will quickly launch Cortana on my device and have my Cortana account linked up with the client service we have here. And then I will say something to Cortana. I configured it to use the super bot command, so I say that, then say something, and get some response back. So, "Tell super bot how are you."

Cortana: Super bot said you are how are you too.

David: This is still showing a deployment I made earlier. That's just a response from a bot I deployed earlier, which would give a response like, "Super bot said you are this too." And that's just to show us how easy it is to have a bot deployed and implemented. But I think something must have gone wrong during my deployment because I have just the basic bot deployed here.

I'm going to try and publish one more time. But the key point here is that a bot can connect with my Cortana interface. And the same thing that I was able to do from the bot emulator earlier, I'm able to do through the Cortana interface: provide some form of input to my code, and then my code can package that and send it back to me.

In subsequent webinars in this series, we'll be able to explore bots in more detail. And we'll be focusing on specific case studies where we can navigate through data, generate charts that represent sales figures between January and March for a fictitious company, and we can see how the bot responds to things like that for different organisations. I'm going to test the bot one more time. I'm going to hit the listen button on my device again and say, "Tell super bot how are you."

Cortana: Echo. Hey, tell super bot, how are you.

David: So it says echo. Hello.

Cortana: Echo and hello.

David: Okay. So that's just to show how, in this simple example, the echo goes back here. And I could change this to "Super bot," just the final item here, "Super bot thinks you are..." and then I'm going to concatenate the text and say, "Too." And then I'm just going to save this and publish. This should also just take a few seconds since I have done a publish earlier. And we should see this change when we hit Cortana up this time.
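The edit David makes here amounts to changing the string the bot builds from the user's text. The demo's exact code is not in the transcript, so the helper below is a self-contained sketch of that concatenation. The class and method names are hypothetical, and in the bot itself the resulting string would simply replace the echo text in the message handler.

```csharp
// Hypothetical helper illustrating the reply David describes.
public static class SuperBotReply
{
    // Wraps whatever the user said in the "Super bot thinks you are ... too" phrase.
    public static string Build(string userText)
    {
        return "Super bot thinks you are " + userText + " too";
    }
}
```

With this change, `SuperBotReply.Build("not smart")` returns "Super bot thinks you are not smart too".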

We should get a text that says "Super bot thinks you are..." and then whatever I say to it and then, "Too." I try to avoid saying something very nasty, so I don't get a nasty response back. This is the last piece before we wrap up the demo for today. In subsequent sessions, it would be good to explore the business case for things like IoT and also have some more interesting demos that we could discuss in the next couple of webinars.

Dan: This one is just basic and it's a little taste, dipping the toes in the water. We've got numerous sessions planned. Details of those will follow shortly for those that are interested in learning more.

David: Here I'm going to say, "Tell super bot you are not smart."

Cortana: Super bot thinks you are not smart too.

David: There we have it. That's just based on the input text I've provided here. So depending on what your use case is in your organisation, you would be able to take in some input text. You could process it, do whatever you want to do, and then package the output and send that back to the user. I'm just going to hand it over to Chris.

Chris: I hope you found it useful. Apologies that my video seems to be a bit poor. If you didn't know much about bot technology before, then hopefully, it's inspired you to think about some potential use cases in your organisation. If it has, we'd love to hear about them on any of our social channels, etc., or directly through the website or email. And if any questions pop into your head over the next few weeks or days, just get in touch. We'd love to hear more from you about what you're planning and whether or not you're going to be implementing some bots.